ANOVA, an acronym for “Analysis of Variance”, is a statistical technique used to evaluate whether there are significant differences between the means of three or more independent groups. In other words, ANOVA compares the means of different groups to determine whether at least one of them is significantly different from the others.

The ANOVA technique

Analysis of Variance (ANOVA) is a statistical technique based on the decomposition of the variability in data into two main components:

- variability between groups

- variability within groups
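In symbols, with $k$ groups, $n_i$ observations in group $i$, $x_{ij}$ the $j$-th observation of group $i$, $\bar{x}_i$ the mean of group $i$ and $\bar{x}$ the overall (grand) mean, the total variability splits exactly into these two parts:

$$
\sum_{i=1}^{k}\sum_{j=1}^{n_i}\left(x_{ij}-\bar{x}\right)^2
= \sum_{i=1}^{k} n_i\left(\bar{x}_i-\bar{x}\right)^2
+ \sum_{i=1}^{k}\sum_{j=1}^{n_i}\left(x_{ij}-\bar{x}_i\right)^2
$$

that is, $SS_\text{total} = SS_\text{between} + SS_\text{within}$.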

Imagine that you have several people assigned to different groups and that you measure a variable of interest for each person. ANOVA asks whether the differences we observe between the group means of this variable are larger than what would be expected by chance alone.

To do this, ANOVA uses the F-test, which compares the variance between groups to the variance within groups. If the variability between groups is significantly greater, this suggests that at least one of the groups differs from the others in terms of the measured variable.
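The test statistic is the ratio of the two variance estimates (mean squares). With $k$ groups and $N$ observations in total, and writing $SS_\text{between}$ and $SS_\text{within}$ for the between-group and within-group sums of squares:

$$
F = \frac{MS_\text{between}}{MS_\text{within}} = \frac{SS_\text{between}/(k-1)}{SS_\text{within}/(N-k)}
$$

Under the null hypothesis, $F$ follows an F-distribution with $k-1$ and $N-k$ degrees of freedom. For example, for the three groups of five values used in the SciPy example later in this article, $SS_\text{between} \approx 28.933$ and $SS_\text{within} = 508$, so $F \approx (28.933/2)/(508/12) = 14.467/42.333 \approx 0.342$.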

The null hypothesis of ANOVA states that all group means are equal, while the alternative hypothesis states that at least one group mean differs from the others. The decision to reject or not reject the null hypothesis depends on the p-value associated with the F-test. If the p-value is low enough (generally below 0.05), the null hypothesis can be rejected.
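The F-test can be reproduced by hand to see where the numbers come from. The sketch below assumes NumPy and SciPy are installed; `one_way_anova` is a hypothetical helper name, and the data are the same three groups used in the SciPy and StatsModels examples later in this article. It computes the between-group and within-group sums of squares, turns them into mean squares, and takes the p-value from the upper tail of the F-distribution with `scipy.stats.f.sf`.

```python
import numpy as np
from scipy import stats


def one_way_anova(*groups):
    """Return (F, p) for a one-way ANOVA computed from sums of squares."""
    arrays = [np.asarray(g, dtype=float) for g in groups]
    all_values = np.concatenate(arrays)
    grand_mean = all_values.mean()
    k = len(arrays)            # number of groups
    n_total = all_values.size  # total number of observations

    # Between groups: group size times squared distance of the group mean from the grand mean
    ss_between = sum(a.size * (a.mean() - grand_mean) ** 2 for a in arrays)
    # Within groups: squared deviations of each value from its own group mean
    ss_within = sum(((a - a.mean()) ** 2).sum() for a in arrays)

    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n_total - k)
    f_stat = ms_between / ms_within
    # p-value: upper-tail probability of the F-distribution with (k - 1, N - k) degrees of freedom
    p_value = stats.f.sf(f_stat, k - 1, n_total - k)
    return f_stat, p_value


group1 = [22, 25, 28, 32, 35]
group2 = [18, 24, 30, 28, 34]
group3 = [15, 20, 25, 30, 35]

f_stat, p_value = one_way_anova(group1, group2, group3)
print(f"F = {f_stat:.4f}, p = {p_value:.4f}")  # F = 0.3417, p = 0.7172
```

The result matches what `stats.f_oneway` reports for the same data.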

It is important to note that ANOVA assumes that the observations are independent, that the data within each group are approximately normally distributed, and that the groups have similar variances. These are the main concepts on which ANOVA is based to determine whether the differences observed between groups are statistically significant or simply due to chance.
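A quick way to check the normality and equal-variance assumptions is sketched below, assuming SciPy is available. It applies the Shapiro-Wilk test (`stats.shapiro`) to each group and Levene's test (`stats.levene`, which in SciPy centers on the median by default) across the groups; the data are the same three example groups used later in this article. With only five observations per group these tests have little power, so the results are a rough indication rather than proof that the assumptions hold.

```python
from scipy import stats

# The same three example groups used in the ANOVA examples
groups = {
    "group1": [22, 25, 28, 32, 35],
    "group2": [18, 24, 30, 28, 34],
    "group3": [15, 20, 25, 30, 35],
}

# Shapiro-Wilk normality test for each group:
# a small p-value suggests the group departs from a normal distribution
normality = {}
for name, values in groups.items():
    w_stat, p_norm = stats.shapiro(values)
    normality[name] = (w_stat, p_norm)
    print(f"{name}: Shapiro-Wilk W = {w_stat:.3f}, p = {p_norm:.3f}")

# Levene test for equal variances across all groups:
# a small p-value suggests the variances differ
lev_stat, p_var = stats.levene(*groups.values())
print(f"Levene statistic = {lev_stat:.3f}, p = {p_var:.3f}")
```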

The T Test

The t-test is a statistical technique used to evaluate whether there is a significant difference between the means of two groups. There are several variations of the t-test, but the two most common are the independent samples t-test and the dependent (or paired) samples t-test.

Here’s how each variant works:

T-Test for Independent Samples:

- Null and Alternative Hypotheses:

- Null Hypothesis (H0): The means of the two groups are equal.

- Alternative Hypothesis (H1): The means of the two groups differ.

- Calculation of the t-value:

The t-value is calculated using the difference between the means of the two groups normalized for the variability of the data.

$$
t = \frac{\bar{x}_1 - \bar{x}_2}{\sqrt{\dfrac{s_1^2}{n_1} + \dfrac{s_2^2}{n_2}}}
$$

Where:

- $\bar{x}_1$ and $\bar{x}_2$ are the means of the two groups.

- $s_1$ and $s_2$ are the standard deviations of the two groups.

- $n_1$ and $n_2$ are the sizes of the two samples.

- Determination of Significance:

You compare the calculated t-value to a Student’s t-distribution or use statistical software to obtain the associated p-value.

- Decision:

If the p value is less than the predetermined significance level (usually 0.05), the null hypothesis can be rejected in favor of the alternative hypothesis, suggesting that there are significant differences between the means of the two groups.
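The steps above can be sketched in Python, assuming SciPy is installed. The example computes the t-value by hand as the difference of the means divided by the square root of s1²/n1 + s2²/n2, then compares it with `scipy.stats.ttest_ind`. The two groups reuse data from the ANOVA examples later in this article purely for illustration. By default `ttest_ind` runs Student's t-test with pooled variances (df = n1 + n2 − 2); with equal sample sizes, as here, its t-value coincides with the hand-computed one.

```python
import math
from scipy import stats

# Two independent groups (illustrative data)
group_a = [22, 25, 28, 32, 35]
group_b = [15, 20, 25, 30, 35]

n1, n2 = len(group_a), len(group_b)
mean1 = sum(group_a) / n1
mean2 = sum(group_b) / n2

# Sample variances (dividing by n - 1)
var1 = sum((x - mean1) ** 2 for x in group_a) / (n1 - 1)
var2 = sum((x - mean2) ** 2 for x in group_b) / (n2 - 1)

# t-value: difference of the means normalized by the combined standard error
t_manual = (mean1 - mean2) / math.sqrt(var1 / n1 + var2 / n2)

# SciPy's independent samples t-test (pooled variances by default)
result = stats.ttest_ind(group_a, group_b)

print(f"t (by hand) = {t_manual:.4f}")
print(f"t (SciPy)   = {result.statistic:.4f}, p-value = {result.pvalue:.4f}")

# Decision at the usual 0.05 significance level
if result.pvalue < 0.05:
    print("Reject H0: the two means differ significantly.")
else:
    print("Insufficient evidence to reject H0.")
```

If the two groups have clearly different variances, passing `equal_var=False` to `ttest_ind` runs Welch's t-test instead.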

T-Test for Dependent Samples:

The dependent samples t-test is used when measurements are paired, for example, when the same individuals are measured before and after a treatment.

The calculation of the t-value is similar, but the difference between the pairs of observations is considered:

$$
t = \frac{\bar{d}}{s_d / \sqrt{n}}
$$

Where:

- $\bar{d}$ is the mean of the differences.

- $s_d$ is the standard deviation of the differences.

- $n$ is the number of matched pairs of observations.

The process of determining significance and making the decision is similar to the independent samples t-test.
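Here is a minimal sketch of the dependent samples t-test, assuming SciPy is installed. The before/after values are hypothetical measurements on five subjects, invented only to illustrate the calculation. The t-value is computed by hand from the mean and standard deviation of the paired differences and then checked against `scipy.stats.ttest_rel`, which works on the same differences (first argument minus second).

```python
import math
from scipy import stats

# Hypothetical measurements on the same five subjects before and after a treatment
before = [72, 75, 68, 80, 77]
after = [70, 74, 65, 78, 74]

# Difference for each matched pair
diffs = [x_before - x_after for x_before, x_after in zip(before, after)]
n = len(diffs)

mean_d = sum(diffs) / n
# Sample standard deviation of the differences (dividing by n - 1)
sd_d = math.sqrt(sum((d - mean_d) ** 2 for d in diffs) / (n - 1))

# t-value: mean difference divided by its standard error (n - 1 degrees of freedom)
t_manual = mean_d / (sd_d / math.sqrt(n))

# SciPy's paired (related samples) t-test
result = stats.ttest_rel(before, after)

print(f"t (by hand) = {t_manual:.4f}")
print(f"t (SciPy)   = {result.statistic:.4f}, p-value = {result.pvalue:.4f}")
```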

In both cases, the t-test measures how large the observed difference between the means is relative to the variability in the data, and the p-value (the probability of observing a difference at least this large if the null hypothesis were true) is compared to the significance level to make a statistical decision.

If you want to delve deeper into the topic and discover more about the world of Data Science with Python, I recommend you read my book:

Fabio Nelli

Calculating the p-value

Calculating the p-value in a t-test involves comparing the calculated t-value to the Student’s t-distribution and determining the probability of obtaining a t-value at least as extreme under the null hypothesis. Here’s how it’s done:

- Calculating the t-value: Calculate the t-value using the appropriate formula for the type of t-test you are running (independent samples or dependent samples).

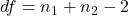

- Degrees of Freedom: Calculate the degrees of freedom for your test. For the independent samples t-test (assuming equal variances), the degrees of freedom are $n_1 + n_2 - 2$, where $n_1$ and $n_2$ are the sizes of the two samples. For the dependent samples t-test, the degrees of freedom are $n - 1$, where $n$ is the number of matched pairs of observations.

- Looking Up the Student’s t-Distribution: Look up the Student’s t-distribution with the calculated degrees of freedom, either in a standard table or with statistical software.

- Comparing the t-value with the Table: Find the critical value of the t-distribution corresponding to your significance level (for example, 0.05). This will be the cutoff point beyond which we reject the null hypothesis.

- Calculating the p-Value: The p-value is the probability, under the Student’s t-distribution, of obtaining a t-value at least as extreme as the one you calculated (for a two-sided test, in either tail). If your t-value is more extreme than the critical value, the p-value is below the significance level and the null hypothesis can be rejected.
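Both the critical value and the p-value can be read from the Student's t-distribution in SciPy, as in the sketch below (SciPy assumed installed). `two_sided_t_decision` is a hypothetical helper: `stats.t.ppf` gives the critical value for a two-sided test, and `2 * stats.t.sf(abs(t), df)` gives the two-sided p-value. The t-value 2.5 with 8 degrees of freedom (two samples of five) is an arbitrary illustrative number.

```python
from scipy import stats


def two_sided_t_decision(t_value, df, alpha=0.05):
    """Return (critical value, two-sided p-value, reject H0?) for a t-test."""
    # Critical value: leaves alpha/2 probability in each tail
    t_critical = stats.t.ppf(1 - alpha / 2, df)
    # Two-sided p-value: probability of a t-value at least as extreme in either tail
    p_value = 2 * stats.t.sf(abs(t_value), df)
    # Rejecting when |t| exceeds the critical value is equivalent to p_value < alpha
    reject = abs(t_value) > t_critical
    return t_critical, p_value, reject


# Illustrative t-value with df = n1 + n2 - 2 = 5 + 5 - 2 = 8
t_critical, p_value, reject = two_sided_t_decision(2.5, df=8)
print(f"critical value = {t_critical:.3f}, p-value = {p_value:.4f}, reject H0: {reject}")
```

With 8 degrees of freedom and a 0.05 significance level, the critical value is about 2.306, so a t-value of 2.5 leads to rejecting the null hypothesis.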

To simplify the process, most statistical analyses today are performed with software such as R, Python libraries such as SciPy or StatsModels, or dedicated programs such as SPSS. These tools calculate the p-value automatically, making statistical analysis easier.

A simple example of ANOVA with SciPy

Here is an example of how to run a one-way ANOVA for three independent groups using the SciPy library in Python:

```python
import scipy.stats as stats

# Data from the three groups
group1 = [22, 25, 28, 32, 35]
group2 = [18, 24, 30, 28, 34]
group3 = [15, 20, 25, 30, 35]

# Perform the one-way ANOVA for three independent groups
f_statistic, p_value = stats.f_oneway(group1, group2, group3)

# View the results
print(f"F-statistic: {f_statistic}")
print(f"p-value: {p_value}")

# Comparison with the common significance level (0.05)
if p_value < 0.05:
    print("You can reject the null hypothesis: there are significant differences between at least two groups.")
else:
    print("There is insufficient evidence to reject the null hypothesis.")
```

In this example, group1, group2, and group3 are three sets of data. The ANOVA (Analysis of Variance) test is performed using SciPy’s stats.f_oneway, which is the appropriate method for comparing the means of three or more groups. The result includes the F-statistic value and the p-value.

Running this code gives the following result:

```
F-statistic: 0.341732283464567
p-value: 0.7172326913427948
There is insufficient evidence to reject the null hypothesis.
```

Make sure you have SciPy installed before running this code:

```
pip install scipy
```

You can adapt this code according to your specific needs and data.

A simple example of ANOVA with StatsModels

Here is an example of how to run an ANOVA test for three independent groups using the StatsModels library in Python:

```python
import statsmodels.api as sm
from statsmodels.formula.api import ols
import pandas as pd

# Data from the three groups
group1 = [22, 25, 28, 32, 35]
group2 = [18, 24, 30, 28, 34]
group3 = [15, 20, 25, 30, 35]

# Create a DataFrame in "long" format: one row per observation
# (the column name 'Group' must match the name used in the model formula)
data = pd.DataFrame({'Values': group1 + group2 + group3,
                     'Group': ['Group1'] * 5 + ['Group2'] * 5 + ['Group3'] * 5})

# Fit the linear model and compute the ANOVA table
model = ols('Values ~ Group', data=data).fit()
anova_table = sm.stats.anova_lm(model)

# View the ANOVA table
print(anova_table)

# Comparison with the common significance level (0.05);
# .iloc[0] selects the p-value in the first row (the 'Group' factor)
if anova_table['PR(>F)'].iloc[0] < 0.05:
    print("You can reject the null hypothesis: there are significant differences between at least two groups.")
else:
    print("There is insufficient evidence to reject the null hypothesis.")
```

In this example, I’m using the ols function of StatsModels to define an ordinary least squares (OLS) linear regression model. The formula ‘Values ~ Group’ indicates that we are trying to explain the variability in the “Values” data based on the categorical variable “Group”. The anova_lm function then computes the analysis of variance table from the fitted model.

Running the code, you will get the following result:

```
            df      sum_sq    mean_sq         F    PR(>F)
Group      2.0   28.933333  14.466667  0.341732  0.717233
Residual  12.0  508.000000  42.333333       NaN       NaN
There is insufficient evidence to reject the null hypothesis.
```

Make sure you have StatsModels installed before running this code:

```
pip install statsmodels
```

You can adapt this code according to your specific needs and data.

The different types of ANOVA

There are several types of ANOVA, designed to meet the specific needs of different data types and study designs. The main types of ANOVA include:

- One-factor ANOVA: Used when there is only one factor or independent variable. For example, you could use it to compare the averages of three or more groups of participants.

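- Two-factor ANOVA: Involves two independent variables (factors). It can be further divided into two-way ANOVA with replication (more than one observation for each combination of factor levels) and without replication (a single observation for each combination).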

- Multi-factor ANOVA: It involves three or more independent variables. It is more complex than two-factor ANOVA and can handle situations where there are multiple factors influencing the dependent variable.

- Repeated measures ANOVA: Used when the same experimental units are measured multiple times. It is a form of ANOVA that takes into account the correlation between repeated measurements on the same subject.

- Multivariate ANOVA (MANOVA): Extension of ANOVA involving multiple dependent variables. It is used when you want to simultaneously examine differences between groups on multiple dependent variables.

- Randomized block ANOVA: Used when individuals are divided into homogeneous blocks and treatments are randomly assigned within each block.

These are just a few examples and there are many variations and specific adaptations for different research contexts. The choice of the type of ANOVA depends on the nature of the data and the experimental design of the study.